What to do when your classification accuracy is too good

After spending a year in Indiana, I've now relocated to Pittsburgh, where I'm working in a new focus area: applying machine learning to genomics and healthcare. I have a strong interest in this field and hope to pursue my PhD in it.

My current position is with an academic startup called SignatureDX. Although it's technically not affiliated with a university, the organization is primarily led by professors from the University of Pittsburgh and Carnegie Mellon University, giving it a very academic 'feel'.

SignatureDX's research is focused on detecting certain fetal diseases, such as specific types of trisomies, using a less invasive approach than the conventional methods. There are limitations on what I can disclose about my work because we are in the process of applying for a patent. While the company continues to publish research, we cannot provide specific details about how the diseases are detected until the patent application is completed.

My current project involves developing an ensemble classifier. The lab has already devised a method that estimates the likelihood of a particular condition's presence. However, it lacks a multi-class classifier that can determine which condition is likely, or whether there's no condition present at all. To address this, I've employed three techniques:

- Mathematical Analysis: A direct mathematical analysis of the raw classification outcomes produced by the lab's method. It reaches an accuracy of around 70%.

- Random Forest Classifier: A random forest trained on the same outcomes, which achieves over 90% validation accuracy.

- Neural Network Classifier: A neural network trained on the same outcomes, also yielding over 90% validation accuracy.

As much as I would love to take credit for being a neural network genius who developed an ensemble classifier for disease detection with over 90% validation accuracy in only a few days, it is far more likely that the result is too good to be true and I am missing something. So I thought it might be interesting to talk about techniques that are often used to figure out why the accuracy might be overly optimistic.

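One approach is to use leave-one-out accuracy, where a single data sample is left out for validation while the remaining data is used for training, and the process is repeated until every sample has been left out once. Because the model is always scored on a sample it never saw during training, this helps detect overfitting and keeps the model from cheating by memorizing specific samples. It's especially valuable for smaller datasets, since nearly all of the data is still used for training in each round.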
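As a rough illustration, leave-one-out accuracy can be computed with scikit-learn's `LeaveOneOut` splitter. This is only a sketch: the data here is synthetic (generated with `make_classification`), and the sample count, feature count, and forest size are assumptions chosen for the example, not anything from my actual project.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import LeaveOneOut, cross_val_score

# Synthetic stand-in for a small dataset (assumption: 60 samples, 3 classes).
X, y = make_classification(
    n_samples=60, n_features=10, n_informative=5,
    n_classes=3, random_state=0,
)

clf = RandomForestClassifier(n_estimators=50, random_state=0)

# Each fold trains on 59 samples and tests on the single held-out one,
# so every per-fold score is either 0.0 (wrong) or 1.0 (right).
scores = cross_val_score(clf, X, y, cv=LeaveOneOut())

# The mean over all folds is the leave-one-out accuracy.
loo_accuracy = scores.mean()
print(f"leave-one-out accuracy: {loo_accuracy:.3f}")
```

If this number comes out far below the ordinary validation accuracy, that gap is a hint that the original validation split was too generous.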

Dataset augmentation is another technique I'm considering. While not applicable to all datasets, it's possible for ours. The detection method involves subsampling, so it produces a slightly different outcome each time it runs. Running it several times on the same raw sample yields several sets of outcomes, all of which can be fed into the classifier. However, the most effective solution for addressing small datasets remains acquiring more raw data, which can be challenging in biological systems research.
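Here is a minimal sketch of that augmentation idea. Since I can't share the real detection method, `toy_detector` is a purely hypothetical stand-in that subsamples rows of a raw sample and averages them; the array shapes and the `augment` helper are likewise assumptions made up for illustration.

```python
import numpy as np

def toy_detector(raw_sample, rng, subsample_size=200):
    """Hypothetical stand-in for a subsampling-based detection step.

    Draws a random subset of rows and returns their per-column mean,
    so repeated calls on the same raw sample give slightly different outputs.
    """
    idx = rng.choice(raw_sample.shape[0], size=subsample_size, replace=False)
    return raw_sample[idx].mean(axis=0)

def augment(raw_samples, labels, n_runs, rng):
    """Run the detector n_runs times per raw sample and collect the outcomes."""
    features, aug_labels, groups = [], [], []
    for sample_id, (raw, label) in enumerate(zip(raw_samples, labels)):
        for _ in range(n_runs):
            features.append(toy_detector(raw, rng))
            aug_labels.append(label)
            groups.append(sample_id)  # remember which raw sample this came from
    return np.array(features), np.array(aug_labels), np.array(groups)

rng = np.random.default_rng(0)
# Three made-up raw samples, one per class, each with 1000 rows and 4 columns.
labels = [0, 1, 2]
raw_samples = [rng.normal(loc=label, size=(1000, 4)) for label in labels]

X_aug, y_aug, groups = augment(raw_samples, labels, n_runs=5, rng=rng)
print(X_aug.shape)  # (15, 4)
```

The `groups` array is there because augmented rows from the same raw sample are near-duplicates. If some land in training and others in validation, the accuracy will look better than it should, so it seems safer to keep each group entirely on one side of the split.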

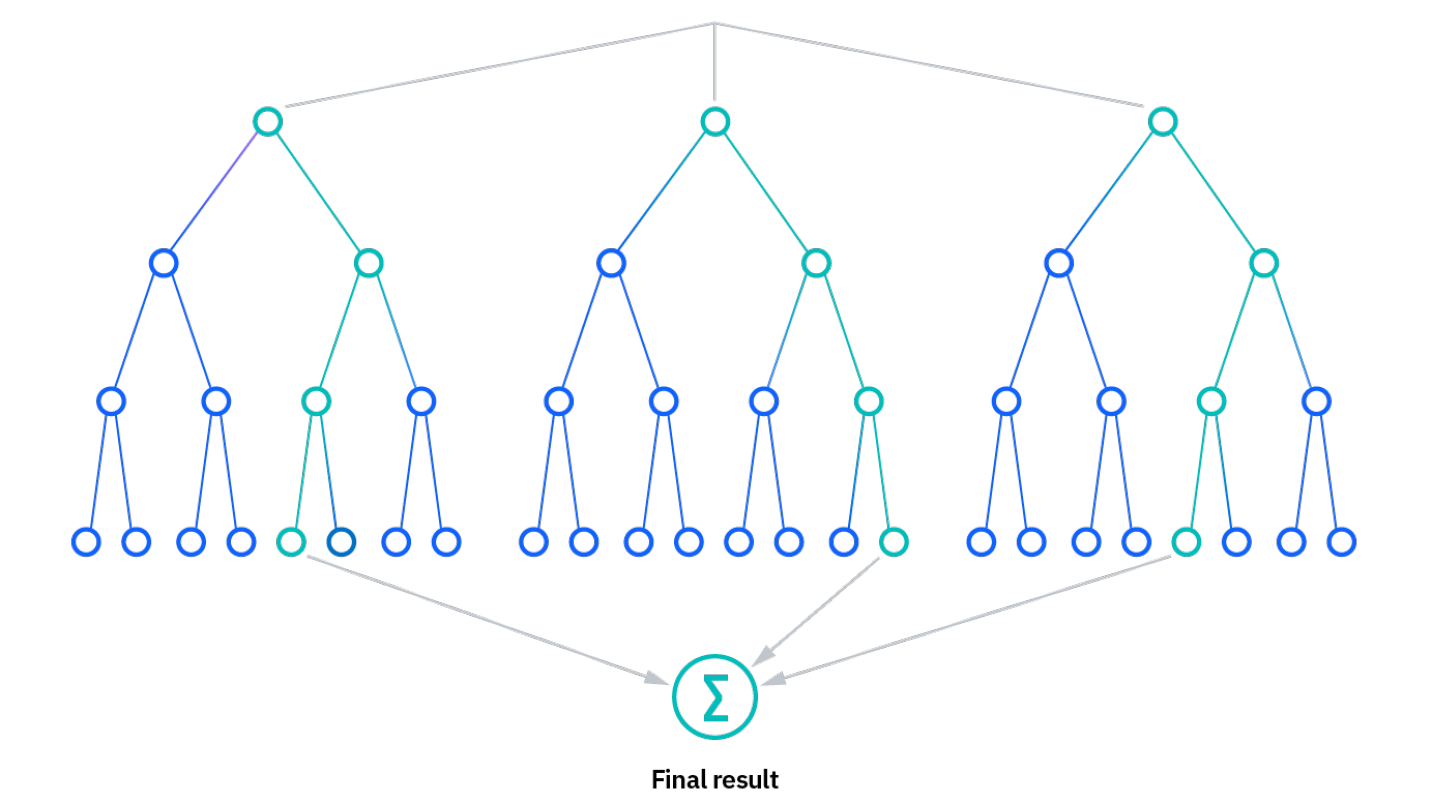

The third method, which comes built in with random forest classifiers but has no equally direct counterpart in neural networks, revolves around exploring feature importance. A random forest comprises numerous decision trees, each generated from a different sample of the data. When new test data is introduced, each tree makes a classification and casts a vote, and the classification with the highest number of votes wins. If you opt for a random forest classifier, you can look into which features are the most valuable, which may provide insight into any underlying issues. For example, if 99% of the classifications are determined by only a few features, there may be an issue such as a feature that leaks the label (or the result could be correct, which would be interesting).
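A quick way to inspect this in scikit-learn is the `feature_importances_` attribute of a fitted `RandomForestClassifier`. The sketch below again uses synthetic data with assumed sizes (200 samples, 8 features, 3 of them informative), so the actual numbers mean nothing beyond illustrating the workflow.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier

# Synthetic stand-in data (assumption: 8 features, only 3 carry signal).
X, y = make_classification(
    n_samples=200, n_features=8, n_informative=3, n_redundant=0,
    n_classes=3, n_clusters_per_class=1, random_state=0,
)

forest = RandomForestClassifier(n_estimators=100, random_state=0).fit(X, y)

# Impurity-based importances: non-negative and normalized to sum to 1.
importances = forest.feature_importances_

# Rank features from most to least important.
order = np.argsort(importances)[::-1]
for i in order:
    print(f"feature {i}: {importances[i]:.3f}")

# Share of total importance held by the top three features.
top3_share = importances[order[:3]].sum()
print(f"top-3 share: {top3_share:.3f}")
```

A very high top-few share is not proof of a problem, but it tells me which features to examine first.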

I hope that one of these techniques will shed light on why my results appear overly optimistic. This understanding will enable me to build a classifier that generalizes effectively and provides accurate results without false inflation.

May you be ever victorious in your endeavors! M.E.W